Building an AI SaaS product on a shoestring budget with AWS serverless (Part 2)

Introduction

This is the second part of our series on building an AI SaaS product. If you haven’t read Part 1, please do, as this part will make much more sense afterwards. To recap, we’ve identified the key things that we need to take into account when designing and building our new platform. So sit back, step back into my shoes, and I’ll take you through the next steps of the process, up to the point where we know which services we are going to use and what our development and deployment setup is going to be.

Building Blocks

Things still feel a little overwhelming, so you’ve decided to do the sensible thing and start breaking the problem down into smaller chunks. The first thing we’re going to do is work out which capabilities matter most, and then see if we can identify an AWS service that could fulfil each requirement.

For that purpose I’ve picked out three examples that we can look at in more detail:

Dataset upload: We know that our users are going to upload data files through a web interface, and we’ll need to store each file so that we can use it later for things like training models or calculating statistics. We’ll also need to capture some metadata, such as a name/label, who uploaded it and when, and link that metadata back to the actual file so that we can display it to the user. This is an easy one to start with. AWS S3 sounds like the obvious place to store the data file: it doesn’t care what format the file is in, it’s cheap to use, and we don’t need file retrieval to be ultra-low latency. The metadata, on the other hand, is a bit different, because it’s going to be displayed directly in our UI, so we do want that to be pretty fast. Traditionally this would live in a SQL database like Aurora, but since we’re favouring serverless, DynamoDB could be a better choice. As for the file upload itself, AWS provide a handy JavaScript library called Amplify that can handle that in the user interface.
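To make the link between file and metadata a little more concrete, here is a minimal sketch in Python. The attribute names, the key scheme and the S3 key layout are all assumptions made for illustration, not the schema we ended up with. The only AWS call involved is `put_item(Item=...)`, so `table` is assumed to behave like a boto3 DynamoDB Table resource.

```python
import uuid
from datetime import datetime, timezone


def build_dataset_item(org_id, uploaded_by, label, filename, now=None):
    """Build the metadata record that links back to the uploaded file in S3."""
    dataset_id = str(uuid.uuid4())
    uploaded_at = (now or datetime.now(timezone.utc)).isoformat()
    return {
        "org_id": org_id,            # hypothetical partition key
        "dataset_id": dataset_id,    # hypothetical sort key
        "label": label,
        "uploaded_by": uploaded_by,
        "uploaded_at": uploaded_at,
        # Hypothetical layout: one S3 prefix per organisation and dataset.
        "s3_key": f"{org_id}/datasets/{dataset_id}/{filename}",
    }


def save_dataset_item(table, item):
    """Persist the record; `table` is assumed to be a boto3 DynamoDB Table."""
    table.put_item(Item=item)
    return item["dataset_id"]
```

Keeping the S3 key inside the metadata record means the UI only ever has to query DynamoDB, and the file itself is fetched from S3 only when it is actually needed.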

User login: Our users, remember, are going to be employees of an organisation. The organisation’s data needs to be shared amongst all of its employees, so our access controls need to be able to manage that. Furthermore, we need to ensure that our customers can set certain security controls to meet their own standards, such as a minimum password strength and MFA support. Now what are your options? Well, Cognito is the service that handles application-level authentication and authorisation, and on the face of it it seems like a reasonable fit. The more you look into it, though, the more you realise that it’s designed for a B2C setting, where a customer is a single individual and there is no such thing as an organisation level. Never mind: you dig a little deeper, and with a bit of coaxing it looks like there are some workarounds that will support your requirements. The only other alternative at this stage is to go with an external service like Auth0, and that sounds like a lot of hard work, so let’s not go there, you convince yourself.
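One workaround of this kind, shown here purely as an illustration and not necessarily the one we settled on, is to store an organisation identifier on each user as a Cognito custom attribute. Custom attributes appear in the ID token with a `custom:` prefix, so the backend can compare that claim with the organisation that owns the requested resource. The attribute name `org_id` is hypothetical, and the sketch assumes the token has already been verified elsewhere.

```python
def caller_org(claims):
    """Return the organisation id carried in already-verified token claims."""
    org_id = claims.get("custom:org_id")  # hypothetical custom attribute name
    if not org_id:
        raise PermissionError("token carries no organisation id")
    return org_id


def can_access(claims, resource_org_id):
    """Allow access only to resources owned by the caller's organisation."""
    return caller_org(claims) == resource_org_id
```

For example, `can_access({"custom:org_id": "acme"}, "acme")` is true, while the same claims checked against a resource owned by another organisation are rejected.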

Model API Prediction: Here we want to create a REST-based API that accepts JSON input from a user request, passes it to a trained model, gets the prediction and returns the result. Our models aren’t going to be huge, but they are going to be neural networks developed using the PyTorch library. The response needs to be pretty quick, as we’re dealing with a real-time process here. On the face of it, using API Gateway with a Lambda function to handle the request would seem like the obvious route to take. However, there’s a problem: for our logic to run, our tiny Lambda function needs to load a number of dependencies, including PyTorch. Lambda functions, remember, are designed for lightweight tasks, and a zip-based Lambda deployment package is capped at 250 MB unzipped, which just isn’t enough to hold dependencies of that size. So what are the alternatives? AWS SageMaker is an option, but at the time it could only serve one model per endpoint, and since our customers could have hundreds of models this isn’t going to work. We have to look further down, towards the server-based services, at which point we see AWS Fargate, a Docker container based service that can manage clusters of containers in a serverless-like way. You have to provide the Docker image and tell it what virtual resources to assign to the containers, but there’s no EC2 setup. Because the Docker image can be far larger than a Lambda package, the dependencies aren’t going to be a problem. So as long as our service can spin up containers that host a web framework like Flask or Django, we should be good. There’s even an auto-scaling option, which means that the service will automatically spin up more containers depending on the load. Sounds like we have a potential solution.
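To give a feel for what runs inside such a container, here is a hedged sketch of the request handling, kept free of Flask and PyTorch so that it stands on its own. Everything in it is an assumption for illustration: the JSON field names (`model_id`, `features`), the idea of keeping only a handful of recently used models in memory, and the `loader` function, which in a real service would fetch and deserialise a PyTorch model. A model is treated as any callable that returns something JSON-serialisable.

```python
import json
from collections import OrderedDict


class ModelCache:
    """Keep at most `capacity` loaded models, evicting the least recently used."""

    def __init__(self, loader, capacity=8):
        self._loader = loader        # hypothetical: fetches and loads a model by id
        self._capacity = capacity
        self._models = OrderedDict()

    def get(self, model_id):
        if model_id in self._models:
            self._models.move_to_end(model_id)    # mark as most recently used
            return self._models[model_id]
        model = self._loader(model_id)
        self._models[model_id] = model
        if len(self._models) > self._capacity:
            self._models.popitem(last=False)      # drop the least recently used
        return model


def handle_predict(cache, body):
    """Turn a JSON request body into a (status code, JSON response body) pair."""
    try:
        payload = json.loads(body)
        model_id = payload["model_id"]
        features = payload["features"]
    except (ValueError, KeyError, TypeError):
        return 400, json.dumps({"error": "expected JSON with model_id and features"})
    model = cache.get(model_id)
    return 200, json.dumps({"model_id": model_id, "prediction": model(features)})
```

In a Flask or Django app the route would do little more than pass the raw request body to `handle_predict` and return the status and body it gets back. With hundreds of models per customer, a cache along these lines is one way to avoid reloading a model on every request without holding them all in memory at once.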

3. Development setup

Change deployment process

We’re at a point now where we have a pretty good idea of the overall architecture that we need to implement and the services that we’ll use. Is it complete? No way, but if we tried to design it all up front we’d still be building it today. The next thing we need is a development environment, and going back to our list of considerations in Part 1, it seems like it’s going to be pretty important to get our pipeline sorted out so we can develop, test and push to production without tripping over ourselves. Now, source control is critical and we already use GitHub, so that’s the logical choice to go with. However, the part I want to focus on here is something called Infrastructure as Code.

What do I mean by infrastructure? Well, just about anything really: databases, API gateways, eventing systems, queueing systems, log setups, and everything that we identified in the previous section. Infrastructure as Code means defining all of this as code, so that you can keep track of it in your source control system and then deploy it to an AWS account of your choosing. It works through, guess what, another AWS service called CloudFormation, which accepts input called templates, written as YAML files. The kicker is that reading these CloudFormation templates is like reading Sanskrit, and believe me, I’m no Indiana Jones. Fortunately there’s a solution in the form of Serverless.

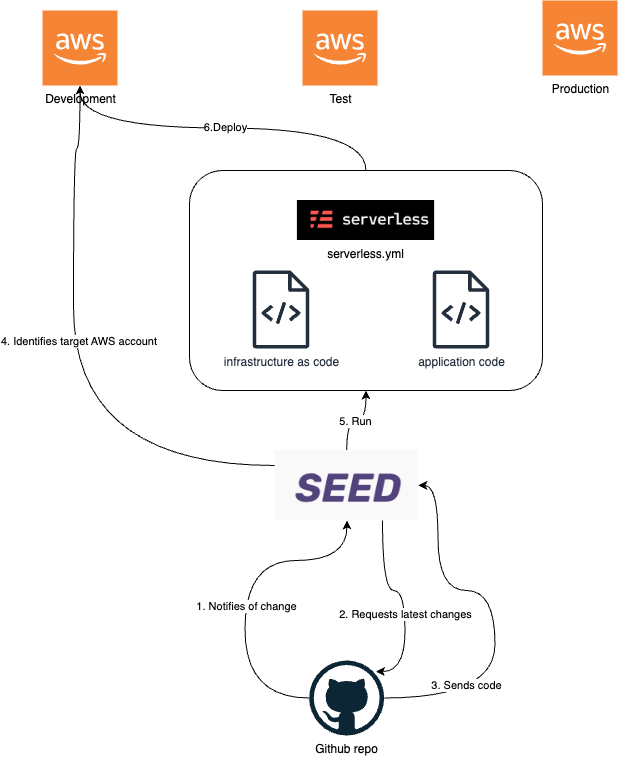

So hold on, because things are going to get confusing, but bear with me because it will be worth it, I promise. There is an open source framework from serverless.com called the Serverless Framework (not to be confused with AWS serverless services), and it makes the process of defining infrastructure so much more straightforward than using CloudFormation templates directly. Essentially, you create a directory that will contain everything you need for a micro-service, and then you create a single YAML template that defines all the infrastructure and points at your function logic, which is actually pretty straightforward. When you’re ready to deploy, you call a command that will push it to an AWS environment. The framework converts everything to CloudFormation templates and deploys it for you. It’s really simple. There are other options on the market, like Terraform and more recently SST, but for us this was perfect.
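The function logic that sits alongside such a template can be very small. As a sketch only, assuming a Python runtime and an HTTP endpoint wired up through API Gateway’s Lambda proxy integration (neither of which is specified above), a handler might look like this. The greeting payload is made up for the example.

```python
import json


def handler(event, context):
    """Minimal Lambda handler for an API Gateway proxy event."""
    # With proxy integration the request body arrives as a string (or None).
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "world")
    # API Gateway expects a status code and a string body in the response.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello {name}"}),
    }
```

Because the handler is just a function that takes a dictionary and returns a dictionary, it can be exercised locally with a hand-written event before anything is deployed.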

To make things even easier, we also subscribed to seed.run, an online service that automatically deploys to AWS environments using the Serverless templates and can be configured to trigger on updates to GitHub branches. It’s simple, and it completely automates deployment across all the AWS environments that we need now and will ever need.

5. Micro-services

Now, micro-services have had a bad rap in recent years for overcomplicating the overall architecture, slowing down development, and making things more expensive to maintain and develop in the future. To some extent I agree, particularly if you really put the micro in micro-services and end up with hundreds of the little critters all over the place, each isolated from the others with the autonomy to do as they please. That gives me a headache just thinking about it. But if you’re sensible and break your services down into high-level domain components, like user and model for example, I’ve found that it doesn’t really require any additional overhead to manage. I also think that when you’re using serverless services, which are by nature simple to keep separated, it’s much easier to go down the micro-service route. So yes, you’re going to use micro-services. Is it perfect? No. But as with everything it’s a trade-off, and if we were to build our platform on Kubernetes then maybe we’d come to a different decision, but that’s a different story.

6. Service Reliability and Security

Now, as we suspected in the previous post, AWS doesn’t take all the load off service reliability and security, but we can at least get some help courtesy of what they call the AWS Shared Responsibility Model, and the good news is that using serverless services really helps us out.

AWS Shared Responsibility Model

This is essentially the agreement that defines where the boundary of responsibility sits between us and AWS. As you might expect, AWS are responsible for all the physical security, the hardware and some software. The more “serverless” we are, the more AWS are responsible for. For example, if we were to use EC2 then we’d be responsible for the operating system, but with a service like Lambda we aren’t, because there is no operating system for us to manage. That doesn’t mean that it doesn’t exist; it just means that AWS manage it for us. So by using serverless services we are minimising the things that we are responsible for, and that reduces our risk and our costs because we don’t need to spend money maintaining those systems.

Another thing worth pointing out is that for things that are in our control, like encryption at rest and firewall configuration, AWS provide options that we can use to address those areas of concern. Server-side encryption on S3 and DynamoDB is as simple as selecting an option, and a firewall that addresses the main OWASP vulnerabilities can be set up in a service called WAF. There are other services too that provide threat detection, auditing, user activity tracking, secrets management, encryption in transit, backup and more. It takes a bit of time to research it all, but it’s not expensive to set up and doesn’t cost a lot to maintain. From our point of view it really makes sense to get this all sorted as part of the development process.

7. Monitoring and Observability

AWS provides a few services that handle activity and user logging: CloudWatch, CloudTrail and X-Ray. Of these, CloudWatch is probably where you’ll head in the event of an application error, but it’s not the easiest interface to work with, and sometimes finding the issue can feel like finding a needle in a haystack. There are other vendors whose applications do a better job of alerting you to the problem and then presenting the information in a format that makes it much easier to diagnose. Having looked into it, you decide that for a few dollars a month a sentry.io subscription makes a lot of sense, as it means we won’t be wasting time trawling through logs on the production system.

8. Next time

So in Part 3 we’ll look at the build process and some of the complications that we encountered, and see the project through to delivery.