Building an AI SaaS product on a shoestring budget with AWS serverless (Part 3)

Introduction

This is the third and final part of our series on building an AI SaaS product. If you haven’t read Parts 1 and 2, please do, as this part will make much more sense. Just to recap: we’ve broadly identified our capabilities and which AWS services will support them. We’ve designed an automated deployment process using Infrastructure as Code, with the help of serverless.com and seed.run, which should eliminate the need to make manual changes in an AWS account. We’ve worked out some microservices and have answers to some of the questions we considered in Part 1. Now let’s pick up the story as we go through the rest of the development process and encounter some of the realities of using AWS services.

What we did

Now, I know it seems like it’s taken an age to get to this point, but in terms of actual time it really hasn’t. I’m not going to bore you with weekly updates, so let’s just cut to the chase and I’ll tell you what we did.

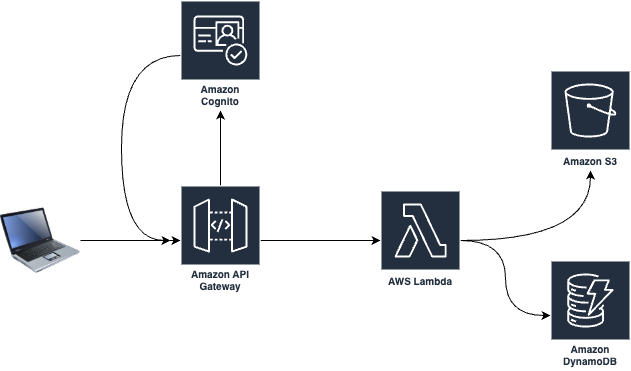

basic infrastructure architecture

The basic architecture diagram illustrates the default pattern that we used wherever we could. The flow is as follows: a user interacts with a web application, which makes calls to a REST API delivered through API Gateway. Each request is authenticated by Cognito, confirming that it has come from a valid user. The request is then routed to an AWS Lambda function that performs the required logic, which may involve retrieving or creating data in DynamoDB or S3. When that’s done, the response is sent back to the web application. It’s super simple, and thanks to the AWS Free Tier it costs very little, if anything, to run.
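To make that flow concrete, here is a minimal sketch of a Python Lambda handler sitting behind an API Gateway REST API with a Cognito user pool authorizer. It assumes the Lambda proxy integration, where API Gateway passes the verified token’s claims under `requestContext.authorizer.claims`, and it assumes a hypothetical `custom:tenant_id` user attribute carrying the customer id. The data access is passed in as a plain function so the sketch runs without AWS; in a real function it would be a DynamoDB or S3 call.

```python
import json
from typing import Any, Callable, Dict, Optional

# Hypothetical data-access function: (tenant_id, item_id) -> item dict or None.
# In a deployed function this would wrap a DynamoDB or S3 read.
Fetcher = Callable[[str, str], Optional[Dict[str, Any]]]


def _response(status: int, body: Dict[str, Any]) -> Dict[str, Any]:
    """Build the response shape expected by an API Gateway Lambda proxy integration."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def handle_get_item(event: Dict[str, Any], fetch: Fetcher) -> Dict[str, Any]:
    """Handle e.g. GET /items/{id}; API Gateway has already validated the Cognito token."""
    # With a Cognito user pool authorizer, the token's claims arrive in the request context.
    authorizer = (event.get("requestContext") or {}).get("authorizer") or {}
    claims = authorizer.get("claims") or {}
    tenant_id = claims.get("custom:tenant_id")  # assumed custom user attribute
    if not tenant_id:
        return _response(403, {"error": "no tenant associated with this user"})

    item_id = (event.get("pathParameters") or {}).get("id")
    if not item_id:
        return _response(400, {"error": "missing item id"})

    # The tenant id comes from the verified token, never from the request itself.
    item = fetch(tenant_id, item_id)
    if item is None:
        return _response(404, {"error": "not found"})
    return _response(200, item)
```

The deployed entry point would then be a thin wrapper such as `def lambda_handler(event, context): return handle_get_item(event, fetch_from_dynamodb)`, where `fetch_from_dynamodb` is a hypothetical boto3-based function. Injecting the data access keeps the handler easy to test without an AWS account.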

Now, this is not an exhaustive description of everything we did. From memory, we used about 30 different AWS services in total by the end of our initial round of development. I know that we haven’t discussed the front end, which was a React app, nor the details of the Docker image containing the machine learning logic, but since this is a post about AWS serverless I’m going to try to stay on that one topic.

Tenancy Isolation

In the end, we decided to have a single stack and enforce tenancy isolation through logic. This meant things like a customer id field in DynamoDB and per-customer folder names in S3. We had some guidance from Tod Golding at AWS, who has also given a talk on the subject. We spent a lot of time thinking about how this would work and how robust and resilient our solution would be. To my mind it was critical to get this absolutely right, so this was the one part of our architecture that we designed before we started laying down any other code.
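Here is a minimal sketch of what “tenancy isolation through logic” can look like, assuming a DynamoDB design where every partition key is prefixed with the tenant id and S3 objects live under a per-tenant prefix in a shared bucket. The `TENANT#...` key format, the tenant id format and the helper names are illustrative assumptions, not the exact scheme we used. The idea is that every key is built through one choke point that validates the tenant id and refuses anything that could escape the tenant’s space.

```python
import posixpath
import re

# Assumed tenant id format: lowercase letters, digits and hyphens only.
_TENANT_ID = re.compile(r"[a-z0-9-]{1,64}")


def _check_tenant(tenant_id: str) -> str:
    """Reject ids that could smuggle key separators or path segments."""
    if not _TENANT_ID.fullmatch(tenant_id):
        raise ValueError(f"invalid tenant id: {tenant_id!r}")
    return tenant_id


def dynamo_pk(tenant_id: str, entity: str, entity_id: str) -> str:
    """Partition key that always carries the tenant id, e.g. TENANT#acme#ORDER#42."""
    return f"TENANT#{_check_tenant(tenant_id)}#{entity.upper()}#{entity_id}"


def s3_key(tenant_id: str, relative_path: str) -> str:
    """Object key confined to the tenant's own prefix in a shared bucket."""
    prefix = _check_tenant(tenant_id) + "/"
    # Collapse "." and ".." segments, then make sure we are still under the prefix.
    key = posixpath.normpath(prefix + relative_path.lstrip("/"))
    if not key.startswith(prefix):
        raise ValueError(f"path escapes tenant prefix: {relative_path!r}")
    return key
```

Combined with taking the tenant id from the authenticated identity rather than from request input, this means one tenant’s request has no way to address another tenant’s rows or objects.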

GDPR / Regulatory Compliance

This was one area where, in the end, we got some additional help from an AWS partner, who helped us set up appropriate audit trails and activity tracking. For a few days of consulting, this was, in my view, a worthwhile investment in an area that we really didn’t want to get wrong.

Security

Thanks to our automated deployment processes, the need for any sort of access to the production system was greatly reduced. When we do need access, we follow best practices such as using multi-factor authentication (MFA) and limiting access to only the services that are strictly required.
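As an illustration of “MFA plus least privilege”, here is a small Python helper that builds an IAM policy document granting only the listed actions on specific resources, and only when the caller authenticated with MFA. `aws:MultiFactorAuthPresent` is a standard IAM global condition key; the helper itself, and the example action and ARN in the usage line, are hypothetical.

```python
import json
from typing import Any, Dict, List


def least_privilege_policy(actions: List[str], resources: List[str]) -> Dict[str, Any]:
    """Allow only the given actions on the given resources, and only with MFA."""
    if not actions or not resources:
        raise ValueError("a least-privilege policy needs explicit actions and resources")
    if "*" in actions or "*" in resources:
        raise ValueError("a bare '*' defeats the purpose of least privilege")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": actions,
                "Resource": resources,
                # If the MFA key is false or absent, this Allow does not apply,
                # so the request falls back to IAM's implicit deny.
                "Condition": {"Bool": {"aws:MultiFactorAuthPresent": "true"}},
            }
        ],
    }


if __name__ == "__main__":
    policy = least_privilege_policy(
        ["dynamodb:GetItem"],
        ["arn:aws:dynamodb:eu-west-2:123456789012:table/example"],  # placeholder ARN
    )
    print(json.dumps(policy, indent=2))
```

In practice a document like this would be generated into the deployment templates rather than attached by hand, which keeps it consistent with the no-manual-changes approach.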

Tenant Onboarding

This was actually much simpler than we had initially feared. Our DynamoDB database stored the metadata for all the objects in our domain model, so we created a “tenant” domain object. Every time we wanted to provision a new tenant, an app accessible to the ops team created the necessary metadata in DynamoDB along with a small number of additional pieces of infrastructure. It’s pretty simple and has enabled us to do some cool things, such as feature enablement, where we can switch on additional features for individual customers.
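A minimal sketch of a tenant domain object with feature enablement, assuming it is stored as a single metadata item in DynamoDB. The attribute names (`pk`, `sk`, `features`, `updated_at`) and the feature name in the example are hypothetical; the point is that provisioning writes one record and feature checks simply read from it.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, FrozenSet


@dataclass(frozen=True)
class Tenant:
    """Hypothetical tenant domain object; feature flags are just a set of names."""
    tenant_id: str
    name: str
    features: FrozenSet[str] = field(default_factory=frozenset)

    def is_enabled(self, feature: str) -> bool:
        return feature in self.features

    def with_feature(self, feature: str) -> "Tenant":
        """Return a copy with one more feature switched on (to be saved back)."""
        return Tenant(self.tenant_id, self.name, self.features | {feature})

    def to_item(self) -> Dict[str, Any]:
        """Metadata item written to DynamoDB when the tenant is provisioned or updated."""
        return {
            "pk": f"TENANT#{self.tenant_id}",
            "sk": "METADATA",
            "name": self.name,
            # A plain list, because DynamoDB does not allow empty string sets.
            "features": sorted(self.features),
            "updated_at": datetime.now(timezone.utc).isoformat(),
        }

    @classmethod
    def from_item(cls, item: Dict[str, Any]) -> "Tenant":
        return cls(
            tenant_id=item["pk"].split("#", 1)[1],
            name=item["name"],
            features=frozenset(item.get("features", [])),
        )
```

For example, `Tenant("acme", "Acme Ltd").with_feature("batch_predictions").to_item()` produces the item the ops app would save, and request handlers would call `is_enabled(...)` before exposing the feature.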

Monitoring and Observability

We mentioned in our previous post that we chose sentry.com to do much of the monitoring for us, and for most errors and exceptions it works pretty well. There are still cases when we need to go trawling through CloudWatch logs to get to the bottom of an issue, and that’s a bit of a pain. Our plan to add logic for monitoring customer activity never happened; unfortunately, we just didn’t prioritise it over the rest of the functionality. In our case I don’t think that’s too much of an issue, since our customers are organisations that we’re in regular contact with, but if we were building a B2C application I think it would be really important to build that into the initial design.
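If we did build activity monitoring, one low-effort starting point would be for each Lambda function to write one structured JSON log line per customer action. Lambda forwards function output to CloudWatch Logs, and CloudWatch Logs Insights discovers fields in JSON log lines, so a query such as `filter type = "activity" | stats count(*) by tenant_id` becomes possible and the trawling gets less painful. The logger name and record fields below are hypothetical.

```python
import json
import logging
import time
from typing import Any, Dict

logger = logging.getLogger("activity")
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate lines via the runtime's root logger
if not logger.handlers:
    # Writes to stderr; Lambda forwards function output to CloudWatch Logs.
    logger.addHandler(logging.StreamHandler())


def log_activity(tenant_id: str, user_id: str, action: str, **details: Any) -> Dict[str, Any]:
    """Emit one JSON line per customer action and return the record that was logged."""
    record = {
        **details,
        # Core fields come last so that details cannot overwrite them.
        "type": "activity",
        "ts_ms": int(time.time() * 1000),
        "tenant_id": tenant_id,
        "user_id": user_id,
        "action": action,
    }
    logger.info(json.dumps(record, default=str))
    return record
```

A call like `log_activity("acme", "user-123", "upload_dataset", rows=120)` at the end of a handler is enough to start seeing which tenants use which features.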

Where serverless excels

Development Speed

We found serverless absolutely great for relatively straightforward areas of functionality, such as capturing information from a user on a form. Even tasks that are more time-consuming and resource-intensive can still be done with Lambda functions if they can be broken down into smaller pieces, and there are established patterns that show how to do that. And where Lambda functions don’t fit, falling back on an ECS Fargate task for ad-hoc jobs such as model training works really well.
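One common way to fit a longer job into Lambda is to split it into batches that each finish comfortably within the function timeout (at most 15 minutes) and fan them out, for example through an SQS queue or a Step Functions Map state. The sketch below only does the sizing arithmetic. The per-item time and the fraction of the timeout you are willing to use are assumptions you would measure for your own workload, and a single item that doesn’t fit is the signal to fall back on Fargate.

```python
import math
from typing import List, Sequence, TypeVar

T = TypeVar("T")

LAMBDA_MAX_TIMEOUT_S = 900  # Lambda's maximum function timeout (15 minutes)


def batch_size_for(seconds_per_item: float, timeout_s: float = LAMBDA_MAX_TIMEOUT_S,
                   budget_fraction: float = 0.5) -> int:
    """Largest batch expected to finish using only a fraction of the function timeout."""
    if seconds_per_item <= 0:
        raise ValueError("seconds_per_item must be positive")
    if not 0 < budget_fraction <= 1:
        raise ValueError("budget_fraction must be in (0, 1]")
    budget = min(timeout_s, LAMBDA_MAX_TIMEOUT_S) * budget_fraction
    if seconds_per_item > budget:
        # A single item doesn't fit: a candidate for an ECS Fargate task instead.
        raise ValueError("one item exceeds the time budget; consider ECS Fargate")
    return math.floor(budget / seconds_per_item)


def make_batches(items: Sequence[T], size: int) -> List[List[T]]:
    """Split the work into batches; each batch would become one Lambda invocation."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [list(items[i:i + size]) for i in range(0, len(items), size)]
```

With an assumed 2 seconds per item and half of the 900-second limit as the budget, `batch_size_for(2.0)` gives batches of 225 items; each batch would then be sent as its own message so the invocations run in parallel.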

Ease of Configuration

Most of the settings you need to control are available as simple options in the AWS console or through the CLI. Most importantly for us, however, we can set those options as configuration in our serverless.com templates. The further you venture towards server-based services, the less support there is from serverless.com, and then you have to write raw CloudFormation templates or use another tool such as Terraform. For us, if we spot that a Lambda function needs more resources to run, that’s just one value to change in a template and we’re done.

Costs (with a caveat)

I know I’ve said it before, but I was genuinely amazed at how low our costs were during the first few years. The free tier really makes a big difference, and if you can stay below its limits you get the service for free. That said, there are some gotchas that we have had to work through, and I’ll cover those in the next section.
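To see why the free tier stretches so far, here is a rough Lambda compute-cost calculator. The default prices and allowances are assumptions based on AWS’s published x86 Lambda pricing (1 million requests and 400,000 GB-seconds free per month, then about $0.20 per million requests and $0.0000166667 per GB-second); check the current pricing page for your region before relying on them. It ignores every other service in the bill.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LambdaPricing:
    # Assumed values; verify against the current AWS Lambda pricing page.
    per_million_requests: float = 0.20
    per_gb_second: float = 0.0000166667
    free_requests: int = 1_000_000
    free_gb_seconds: float = 400_000


def monthly_lambda_cost(invocations: int, avg_duration_ms: float, memory_mb: int,
                        pricing: LambdaPricing = LambdaPricing()) -> float:
    """Approximate monthly Lambda cost in USD after the free tier (Lambda only)."""
    # Compute is billed in GB-seconds: duration multiplied by configured memory.
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    billable_requests = max(0, invocations - pricing.free_requests)
    billable_gb_seconds = max(0.0, gb_seconds - pricing.free_gb_seconds)
    return (billable_requests / 1_000_000 * pricing.per_million_requests
            + billable_gb_seconds * pricing.per_gb_second)
```

Under these assumptions, 500,000 invocations a month at 200 ms and 512 MB come to 50,000 GB-seconds, well inside the free allowance, so `monthly_lambda_cost(500_000, 200, 512)` returns `0.0`. It also shows why the memory setting matters: doubling `memory_mb` doubles the GB-seconds for the same duration, although Lambda also allocates CPU in proportion to memory, so the duration may drop.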

Where serverless has a problem

When serverless is not serverless

I think, to a certain extent, serverless has become a victim of its own success. Much as every crypto cowboy these days seems to have rebranded as a generative AI guru, something very similar happened with serverless, and all of a sudden AWS was marketing new serverless offerings all over the place. The problem was that, to my mind at least, they weren’t really serverless, and I’ll illustrate this with an example.

AWS OpenSearch is a fork of Elasticsearch, the popular search database. A few years ago AWS released a serverless version of it, but the pricing model charged you for the resources allocated to it, and those resources have to be allocated continuously for it to run. So you’re paying for it regardless of whether it’s being used or not. Worse still, it came with auto-scaling, which meant that if AWS decided demand was too high it would increase the resources allocated, and therefore your costs, and then for some reason it took minutes or hours to scale back down again. It was a nightmare: I remember that when our first monthly bill came through after we implemented it, OpenSearch accounted for more than all the other services combined.

So the moral of the story is that just because something says it’s serverless doesn’t necessarily mean it is. Make sure you fully understand the pricing implications, otherwise it could be a costly mistake.
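The difference is easy to see with some back-of-the-envelope arithmetic. This sketch compares a service billed on allocated capacity units per hour (the model described above) with one billed per request, plus the cost of capacity that lingers after an auto-scaling spike. All the prices, unit counts and durations are placeholders, not actual OpenSearch Serverless figures; plug in the current numbers for your region.

```python
HOURS_PER_MONTH = 730  # a common approximation (8,760 hours / 12)


def capacity_billed_cost(units: float, price_per_unit_hour: float,
                         hours: float = HOURS_PER_MONTH) -> float:
    """Cost when you pay for allocated capacity, whether or not it serves any traffic."""
    return units * price_per_unit_hour * hours


def request_billed_cost(requests: int, price_per_million: float) -> float:
    """Cost when you pay only for what you use: zero traffic means a zero bill."""
    return requests / 1_000_000 * price_per_million


def scale_up_penalty(extra_units: float, price_per_unit_hour: float,
                     hours_to_scale_down: float) -> float:
    """Extra cost of capacity that lingers after a spike until auto-scaling scales down."""
    return extra_units * price_per_unit_hour * hours_to_scale_down
```

With placeholder figures of 2 units at $0.25 per unit-hour, `capacity_billed_cost(2, 0.25)` is $365 a month even if nobody searches anything, whereas `request_billed_cost(0, 5.0)` is zero. That floor, not the per-request price, is what to look for in the pricing page of anything labelled serverless.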

When you need to start relying on more server-based services

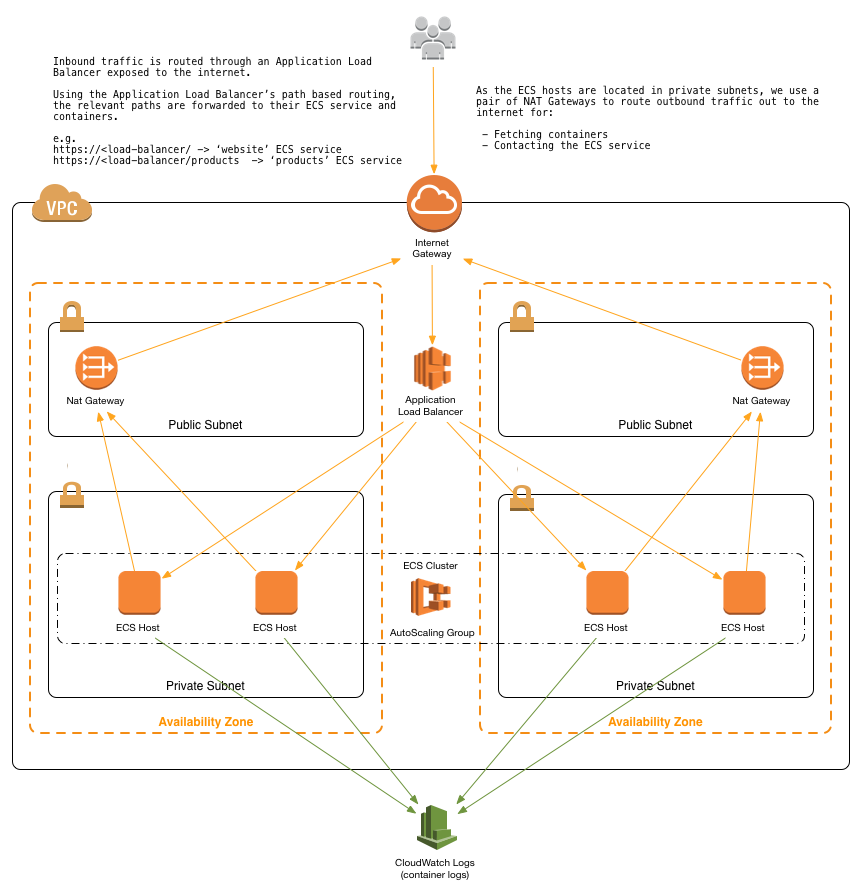

Recall that in part 2 we mapped out a solution for the real time prediction API that was going to use the ECS service. Well on the face of it that doesn’t sound too bad. Sure there’s a bit of work to do to make the docker image and configure with a web server that can respond to requests but nothing too taxing. But here’s the thing, if we wanted the api to be publicly accessible to the world and only have a very limited capacity then that would be all we’d need. But we don’t. We want it to be secure and we want it to be able to scale with demand. For that to happen here’s a picture of what you actually need:

ECS Reference Architecture

Actually, this picture oversimplifies the infrastructure you need, because it doesn’t show half of the components. Hmm, you know what this looks like? Yep, you guessed it: a network. Ahhhhhhhhhhh! We’ve come all this way using serverless wherever we can, and for the one tiny, minuscule piece of the application that we can’t do in serverless, we end up with this monstrosity! After all that, we need a network guy. Of course I’m exaggerating, but here’s the point: your architecture is only as serverless as its least serverless component. If you need the odd server-based component, then you need people with the skills to maintain it, and you have to accept the added security risk that comes with possibly getting it wrong. In Part 1 I talked about a security review with a prospect. That scenario was made up, but believe me, security reviews are very real: in the last one I had, 90% of the time was spent on this network, because the reviewers recognised that this is where the greatest risk lies.

This, for me, is the biggest problem with the serverless model. To reap the benefits of serverless you really need all of your services to be truly serverless. If that’s not possible, the benefits start to diminish quite quickly, and in that scenario I’d also consider other options such as Kubernetes.
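For a sense of scale, the container at the heart of that ECS diagram can be very small; it’s everything around it that grows. Below is a minimal sketch of the kind of web server the real-time prediction API’s Docker image might run, using only Python’s standard library. The `predict` function is a hypothetical stand-in for the real model, the routes and port are arbitrary choices, and the `/health` route is there because a load balancer in front of ECS needs something to health-check.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, Dict


def predict(features: Dict[str, float]) -> Dict[str, Any]:
    """Hypothetical stand-in for the real model: a fixed linear score."""
    weights = {"x1": 0.4, "x2": 0.6}  # placeholder weights, not a trained model
    score = sum(weights.get(k, 0.0) * float(v) for k, v in features.items())
    return {"score": score, "label": "positive" if score >= 0.5 else "negative"}


class PredictionHandler(BaseHTTPRequestHandler):
    def _send(self, status: int, body: Dict[str, Any]) -> None:
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def do_GET(self) -> None:
        # Target for the load balancer's health check.
        if self.path == "/health":
            self._send(200, {"status": "ok"})
        else:
            self._send(404, {"error": "not found"})

    def do_POST(self) -> None:
        if self.path != "/predict":
            self._send(404, {"error": "not found"})
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            features = json.loads(self.rfile.read(length) or b"{}")
            self._send(200, predict(features))
        except (ValueError, TypeError, AttributeError) as exc:
            # Malformed JSON, non-numeric values or a non-object body.
            self._send(400, {"error": str(exc)})


def serve(port: int = 8080) -> None:
    """Container entry point, e.g. invoked from the Dockerfile's CMD."""
    # Bind to all interfaces so the server is reachable from outside the container.
    HTTPServer(("0.0.0.0", port), PredictionHandler).serve_forever()
```

Python’s documentation cautions that `http.server` is not intended for production, so a real image would more likely use a proper WSGI or ASGI server; the standard library just keeps the sketch self-contained. Either way, the application code stays small compared with the load balancer, subnets and security groups it sits behind.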

What I’d do differently next time

Of all the components in the system, the one that niggles me most is the Cognito service. We got it working OK and it does what we need it to do, but it’s clearly not designed for our type of customer model. As a result, as we’ve added more capabilities, such as single sign-on with external vendors, it has been really difficult to configure in a way that is safe and secure. In hindsight, I should have investigated alternative services that would have better suited our needs. If you get the feeling that a service isn’t right, don’t waste time looking for workarounds; use that time to find a better alternative, even if that means going off the platform.

Conclusion

There we are: you’ve made it to the end of the development process for our first version, and you’re set up to embark on a roadmap of enhancements and new features.

Contact

Are you facing challenges with serverless architecture, or do you need some help adopting AI in your organisation? If so, we’d love to hear from you, so please reach out and contact us.